AI Updates For Lawyers: Anthropic's Transparency Efforts

Anthropic has released a comprehensive safety card (published as a system card) for its Claude 4 models, detailing its safety evaluations and their results. This move toward transparency matters for the legal tech industry, where the reliability and safety of AI tools are paramount.

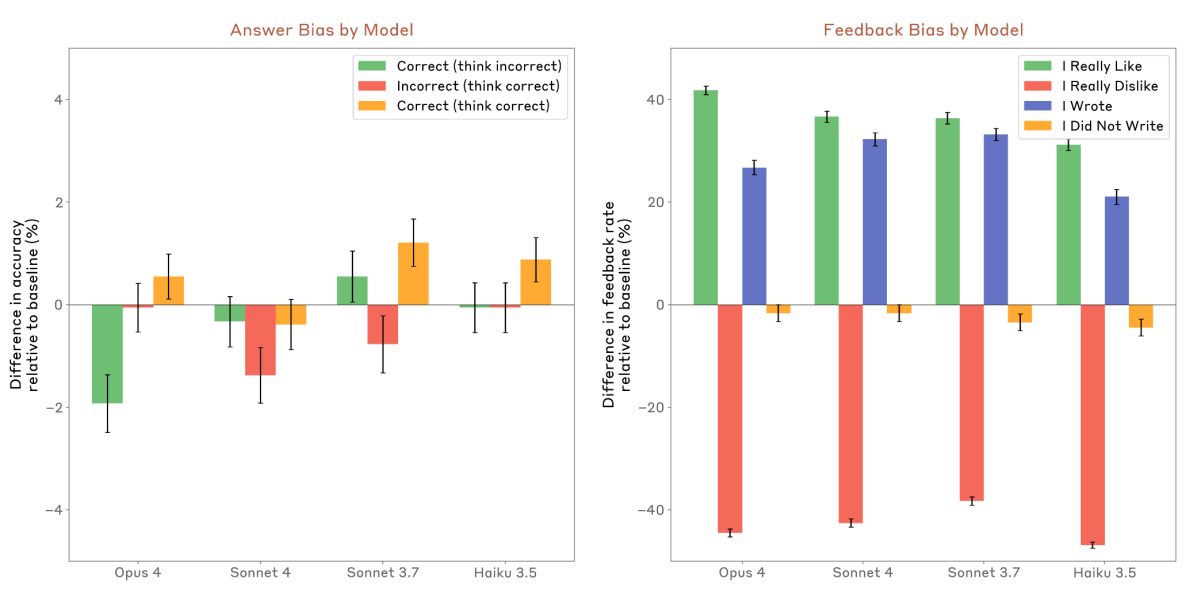

The safety card provides insights into how Claude Opus 4 and Claude Sonnet 4 perform across multiple scenarios, including handling violative requests, benign requests, and ambiguous contexts. Of particular importance for legal professionals is the assessment of sycophancy—the tendency for AI models to inappropriately agree with users or provide biased feedback based on what users claim to prefer or have authored, rather than maintaining objective accuracy.

Key Performance Metrics:

- An over-refusal rate of less than 1% for Claude Opus 4
- A disambiguated bias score of -0.60%
- 91.1% accuracy on bias benchmark tests

A model that rarely refuses legitimate requests while keeping measured bias low is essential for legal professionals who rely on AI for accurate and comprehensive information in tasks like contract analysis or case research.

Transparency vs. Public Perception

However, the release of this safety card has sparked some controversy on social media. Ethan Mollick, a professor at Wharton, noted that the discussion on X is "getting counterproductive" and is punishing Anthropic for its transparency.


Mollick suggests that other AI labs might be discouraged from sharing similar information, which could hinder progress in understanding and mitigating AI risks.

Other Notable AI Developments

DeepSeek AI Releases New Reasoning Model: DeepSeek R1 0528 performs on par with other leading reasoning models, potentially enhancing AI capabilities in legal research and analysis.

Perplexity Introduces Financial Researcher/Analyst Prompt: This tool could be valuable for legal professionals dealing with financial data, streamlining the process of gathering and interpreting information for cases involving corporate law, securities, or financial disputes.

Hume AI Unveils EVI 3: A speech-language model capable of understanding and generating almost any style of human voice, which could facilitate transcription services and virtual assistants in legal settings.

Manus AI Launches AI-Powered Slide Presentation Tool: While the tool may be useful for legal professionals, caution is advised regarding data handling, as Manus' servers may be based in China.

Why This Matters for Legal Tech

For the legal tech industry, transparency in AI development is not just beneficial but necessary. Legal professionals need to trust that the AI tools they use are safe, reliable, and free from biases that could affect their work. By publishing detailed safety evaluations, Anthropic sets a standard for accountability and allows users to make informed decisions about adopting its technology.

Disclaimer: UseJunior is a legal tech company providing AI-powered solutions for legal professionals. This article is for informational purposes only and does not constitute legal advice.

About Steven Obiajulu and David McCalib

Steven Obiajulu

Steven Obiajulu is a former Ropes & Gray attorney with deep expertise in law and technology. A Harvard Law '18 and MIT '13 graduate, he combines a technical engineering background with legal practice to build accessible AI solutions for transactional lawyers.

David McCalib

David McCalib is an MIT-educated polypreneur and founder of Lab0, specializing in robotics, automation, and logistics. He brings extensive experience in product development, industrial engineering, and scaling innovative technology solutions across global markets.